「 AI Jam Powered by NVIDIA 」

For the NVIDIA AI Jam OpenUSD Challenge, we had 48 hours to create digital content using OpenUSD and Omniverse. We worked with industry tools and faced real-world creative and technical challenges. At the end of the challenge, we presented our proposals and work to the AI Summit panel and industry professionals. Our proposal was a fragrance branding production system that turns brand DNA into reusable assets and scalable campaign scenes. Using six Jo Malone–inspired concepts, we demonstrated a repeatable pipeline from brand → assets → multi-channel outputs.

Team Members

Len Mendoza

Pre-production

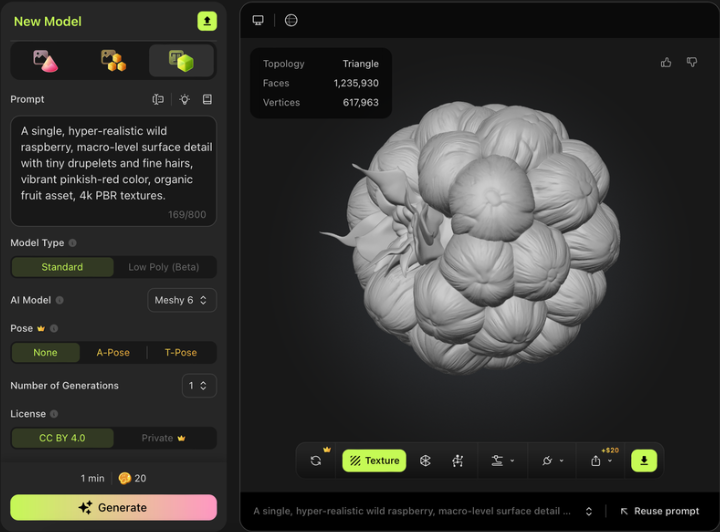

After deciding on the general direction, we played around in Omniverse and figured out how to install it on our own machines. Then came the fun part: we decided which perfumes we would use in this project, along with the objects and materials to go with them. For our assets, we used AI programs to generate 3D models and cut down on time, since we only had 48 hours.

It took multiple tries to get models that we could use. We still had to do some cleanup on our end before we could use them in our final product.

Development

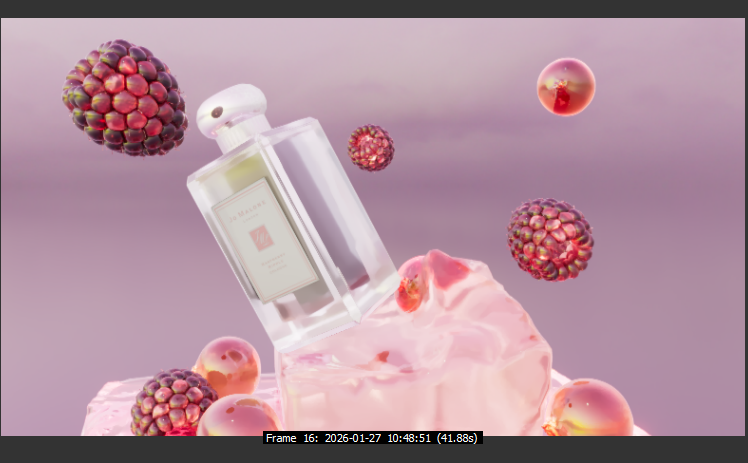

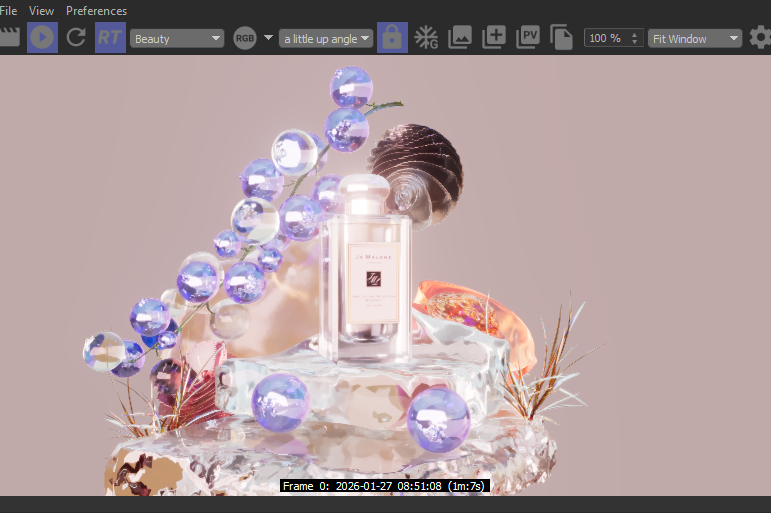

We divided the work among ourselves. My perfume bottles were Jo Malone's "Nectarine Blossom and Honey" and "Raspberry Ripple."

We wanted the aesthetics to differ from bottle to bottle to show the branding potential.

Production

We created our materials in Cinema 4D's Redshift renderer but had difficulty bringing them into Omniverse. I did a lot of troubleshooting with Xinran to avoid having to recreate the textures from scratch. We ended up doing two exports, one in Omniverse and the second in C4D, to try to keep the Redshift materials.

This was my first rendition of "Raspberry Ripple." I was mostly testing the lighting and the framing of the bottle within the camera.

This was the final composition I landed on for the "Nectarine Blossom and Honey" perfume.

Afterthoughts...

In the end, this project really helped me understand the strengths and weaknesses of AI and how it can be used in the motion world to aid our development. I don't necessarily see it as a potential replacement. There is still a long way to go before AI can truly replicate what a motion designer can do.